We couldn’t be more excited to attend VOICE Summit 2019 this week in Newark, NJ! We’re eager to deliver a session on Building Voice Solutions for the Enterprise and engage with voice experts on the latest perspectives, developments, and trends in this exciting space.

In two previous posts, we shared how consumer engagement with voice is driving demand in the enterprise space, and how voice technology is poised to evolve rapidly over the next few years to support features such as speaker recognition and emotional awareness. As voice technology becomes more deeply embedded in homes and workplaces, it will become a natural part of our daily lives.

In light of this forward-looking view, Kopius is excited to announce a new set of enterprise voice solution offerings on both the Microsoft Azure and Amazon Web Services (AWS) platforms.

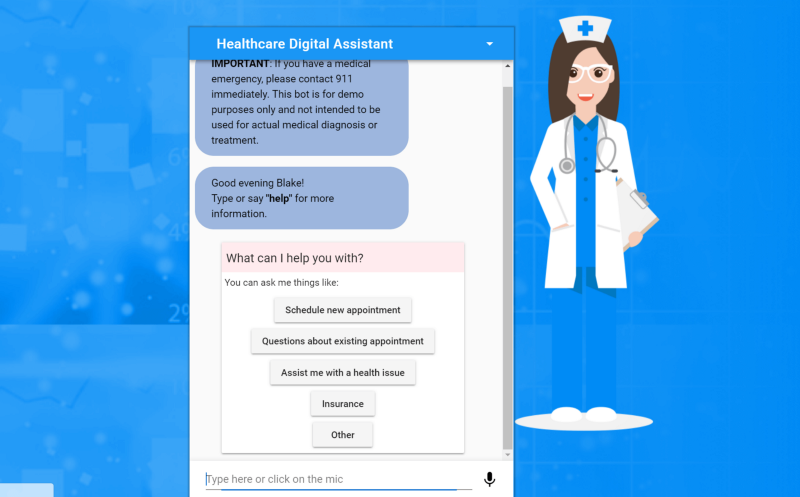

The newly released Azure Chatbot Services: 8 Week Implementation solution helps customers get started quickly with their own enterprise voice skill. Accessing company data and systems through standard interfaces can be difficult, so Valence is using conversational interfaces such as chatbots to offer a much more natural way to communicate with those systems, retrieve data, and perform actions.

To illustrate the impact of this technology in the enterprise, we have released a new case study on voice-enabled inventory management. Valence worked with SteppIR Communication Systems, a next-generation communications company based in the Pacific Northwest, to deploy a voice-enabled chatbot that provides easy access to key data sets. Built on the Amazon Alexa for Business platform, our bot integrates with the Order Time inventory management system. A key challenge for SteppIR is keeping its products moving through the pipeline as quickly as possible, without bottlenecks. With voice access built into the inventory management system, anyone on the team can check inventory levels by part number, order status, and more.

“At Valence we focus on digital transformation technologies and how they work together to deliver real business results for customers,” said Jim Darrin, President at Valence Group. “We believe natural language interfaces — and specifically the ability to access enterprise data with voice commands — is one of the next frontiers in enabling easy access to all sorts of data in the enterprise. Both Microsoft and Amazon are making incredible advancements in fundamental platform capabilities, and we are thrilled to be a partner to both companies in helping translate these cloud services into business solutions for enterprise customers around the world.”

Given the success of this deployment and the direction in which voice technology is heading, we expect voice to unlock key efficiencies in the enterprise and create new competitive advantages for companies.

Additional Resources: