By Yuri Brigance

This article introduces what separates foundation models from regular AI models. We explore why these models are difficult to train and how to understand them in the context of more traditional AI models.

What Are Foundation Models?

What are foundation models, and how are they different from traditional deep learning AI models? The Stanford Institute for Human-Centered Artificial Intelligence (HAI) defines a foundation model as “any model that is trained on broad data (generally using self-supervision at scale) that can be adapted to a wide range of downstream tasks”. Read loosely, this describes a lot of narrow AI models as well, such as MobileNets and ResNets: they too can be fine-tuned and adapted to different tasks.

The key distinctions here are “self-supervision at scale” and “wide range of tasks”.

Foundation models are trained on massive amounts of unlabeled/semi-labeled data, and they contain orders of magnitude more trainable parameters than a typical deep learning model meant to run on a smartphone. This makes foundation models capable of generalizing to a much wider range of tasks than smaller models trained on domain-specific datasets. It is a common misconception that throwing lots of data at a model will suddenly make it do something useful without further effort. In reality, such large models are very good at finding and encoding intricate patterns in the data with little to no supervision. Those patterns can be exploited in a variety of interesting ways, but a good amount of work is still needed to put this learned hidden knowledge to practical use.

The Architecture of AI Foundation Models

Unsupervised, semi-supervised, and transfer learning are not new concepts, and to a degree, foundation models fall into this category as well. These learning techniques trace their roots back to early generative models such as Restricted Boltzmann Machines and autoencoders. An autoencoder consists of two parts: an encoder and a decoder. Its goal is to learn a compact representation of the input data (known as an encoding or latent space) that captures the important features or characteristics of the data, a process sometimes described as “progressive linear separation” of the features that define the data. This encoding can then be used to reconstruct the original input data, or to generate entirely new synthetic data by feeding cleverly modified latent variables into the decoder.

A convolutional image autoencoder, for example, is trained to reconstruct its own input images. Intelligently modifying the latent space allows us to generate entirely new images. One can expand this by adding an extra model that encodes text prompts into latent representations understood by the decoder, which enables text-to-image functionality.
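To make the encoder/decoder idea concrete, here is a minimal sketch. It swaps the convolutional image autoencoder for a tiny linear one written in plain Python, so that it runs without any framework. The toy data (points on the line y = 2x), the starting weights, and the learning rate are all assumptions chosen for illustration. A real model would be built with a framework such as PyTorch or TensorFlow.

```python
# Toy linear autoencoder: 2-D inputs -> 1-D latent code -> 2-D reconstruction.
# All data, sizes, and hyperparameters here are illustrative assumptions.

# Training data: points on the line y = 2x, so one latent number suffices.
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]

enc = [0.5, 0.5]  # encoder weights: z = enc . x
dec = [0.5, 0.5]  # decoder weights: x_hat = dec * z


def encode(x):
    return enc[0] * x[0] + enc[1] * x[1]


def decode(z):
    return (dec[0] * z, dec[1] * z)


def reconstruction_loss():
    """Mean squared reconstruction error over the training data."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x))
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)


learning_rate = 0.05
for _ in range(2000):
    grad_enc = [0.0, 0.0]
    grad_dec = [0.0, 0.0]
    for x in data:
        z = encode(x)
        x_hat = decode(z)
        err = (x_hat[0] - x[0], x_hat[1] - x[1])
        # Gradient of the squared error with respect to the decoder weights.
        grad_dec[0] += 2.0 * err[0] * z
        grad_dec[1] += 2.0 * err[1] * z
        # Backpropagate through the latent code into the encoder weights.
        dz = 2.0 * (err[0] * dec[0] + err[1] * dec[1])
        grad_enc[0] += dz * x[0]
        grad_enc[1] += dz * x[1]
    for i in range(2):
        enc[i] -= learning_rate * grad_enc[i] / len(data)
        dec[i] -= learning_rate * grad_dec[i] / len(data)

final_loss = reconstruction_loss()
# Feed the decoder a latent value that matches no training point
# to synthesize a new sample.
new_point = decode(0.3)
```

After training, `final_loss` is close to zero and `new_point` lies on the same line as the training data, even though it was never part of the dataset. This is the "generate new data from a modified latent variable" idea in miniature.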

Many modern ML models use this architecture, and the encoder portion is sometimes referred to as the backbone with the decoder being referred to as the head. Sometimes the models are symmetrical, but frequently they are not. Many model architectures can serve as the encoder or backbone, and the model’s output can be tailored to a specific problem by modifying the decoder or head. There is no limit to how many heads a model can have, or how many encoders. Backbones, heads, encoders, decoders, and other such higher-level abstractions are modules or blocks built using multiple lower-level linear, convolutional, and other types of basic neural network layers. We can swap and combine them to produce different tailor-fit model architectures, just like we use different third-party frameworks and libraries in traditional software development. This, for example, allows us to encode a phrase into a latent vector which can then be decoded into an image.
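The backbone-and-heads arrangement can be sketched in a few lines. The example below is a plain-Python illustration with untrained random weights. The layer sizes, the two heads (`class_head`, `value_head`), and the helper names are hypothetical and are only meant to show how several heads can read the same backbone features.

```python
import math
import random

random.seed(0)  # fixed seed so the untrained toy weights are reproducible


def make_linear(n_in, n_out):
    """Return (weights, bias) for a dense layer with small random weights."""
    weights = [[random.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear(layer, x):
    weights, bias = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


# Backbone (encoder): maps a 4-number input to an 8-number feature vector.
backbone = make_linear(4, 8)
# Two interchangeable heads (decoders) that read the same features.
class_head = make_linear(8, 3)  # 3-way classification
value_head = make_linear(8, 1)  # single-number regression


def forward(x):
    features = relu(linear(backbone, x))  # computed once, shared by both heads
    class_probs = softmax(linear(class_head, features))
    value = linear(value_head, features)[0]
    return features, class_probs, value


features, class_probs, value = forward([0.2, -1.0, 0.5, 0.3])
```

Adding a third task would mean adding a third head that consumes `features`. The backbone itself would not need to change.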

Foundation Models for Natural Language Processing

Modern Natural Language Processing (NLP) models like ChatGPT fall into the category of Transformers. The transformer concept was introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al. and has since become the basis for many state-of-the-art models in NLP. The key innovation of the transformer model is the use of self-attention mechanisms, which allow the model to weigh the importance of different parts of the input when making predictions. These models make use of something called an “embedding”, which is a mathematical representation of a discrete input, such as a word, a character, or an image patch, in a continuous, high-dimensional space. Embeddings are used as input to the self-attention mechanisms and other layers in the transformer model to perform the specific task at hand, such as language translation or text summarization. ChatGPT isn’t the first, nor the only transformer model around. In fact, transformers have been successfully applied in many other domains such as computer vision and sound processing.
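As a rough illustration of how embeddings and self-attention fit together, the sketch below implements scaled dot-product self-attention in plain Python. It is deliberately simplified: the queries, keys, and values are the embeddings themselves, whereas real transformers first pass them through learned linear projections and use multiple attention heads. The three-word vocabulary and its embedding values are made up.

```python
import math

# Hypothetical 4-dimensional embeddings for a three-token vocabulary.
embedding_table = {
    "the": [0.1, 0.0, 0.2, 0.1],
    "cat": [0.9, 0.1, 0.0, 0.4],
    "sat": [0.2, 0.8, 0.1, 0.0],
}


def softmax(v):
    m = max(v)
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    outputs, all_weights = [], []
    for query in x:
        # Similarity of this token to every token in the sequence, itself included.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        weights = softmax(scores)  # attention weights for this token sum to 1
        # Each output is a weighted average of all token vectors.
        mixed = [sum(w * value[i] for w, value in zip(weights, x)) for i in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


tokens = ["the", "cat", "sat"]
x = [embedding_table[t] for t in tokens]  # discrete tokens -> continuous vectors
outputs, attention = self_attention(x)
```

Each row of `attention` shows how strongly one token weighs every token in the sequence when its output vector is computed. This is the "weigh the importance of different parts of the input" step described above.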

So if ChatGPT is built on top of existing concepts, what makes it so different from all the other state-of-the-art model architectures already in use today? A simplified explanation is that a foundation model differs from a “regular” deep learning model in the immense scale of its training dataset and in the number of trainable parameters it has compared to a traditional generative model. An exceptionally large neural network trained on a truly massive dataset gives the resulting model the ability to generalize to a wider range of use cases than its more narrowly focused brethren, hence serving as a foundation for an untold number of new tasks and applications. Such a large model encodes many useful patterns, features, and relationships in its training data. We can mine this body of knowledge without necessarily re-training the entire encoder portion of the model. We can attach new heads and use transfer learning and fine-tuning techniques to adapt the same model to different tasks. This is how a single pre-trained model (like Stable Diffusion) can be adapted to text-to-image, image-to-image, inpainting, super-resolution, and even music generation tasks.
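The transfer-learning idea of reusing the encoder and training only a new head can be sketched as follows. This is a toy in plain Python, not how a real foundation model is fine-tuned. The "pretrained" backbone weights are made-up stand-ins for a loaded checkpoint, and the downstream task (predicting |x|) was picked so that a linear head on the frozen features can solve it.

```python
# "Pretrained" backbone weights, kept frozen. In practice these would be loaded
# from a checkpoint; the values here are made up for illustration.
backbone_weights = [1.0, -1.0]


def backbone(x):
    """Frozen feature extractor: one ReLU unit per backbone weight."""
    return [max(0.0, w * x) for w in backbone_weights]


# New downstream task the backbone was never trained on: predict |x|.
train_x = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
train_y = [abs(x) for x in train_x]

head = [0.0, 0.0]  # freshly initialized head: the only trainable parameters
frozen_snapshot = list(backbone_weights)


def predict(x):
    return sum(h * f for h, f in zip(head, backbone(x)))


learning_rate = 0.1
for _ in range(500):
    grad = [0.0, 0.0]
    for x, y in zip(train_x, train_y):
        features = backbone(x)
        err = predict(x) - y
        for i in range(2):
            grad[i] += 2.0 * err * features[i]
    # Update the head only; the backbone weights are never touched.
    for i in range(2):
        head[i] -= learning_rate * grad[i] / len(train_x)

prediction = predict(-3.0)  # an input outside the training set
```

Only two numbers (the head) were trained here. With a real foundation model the same pattern means training a small head, or a small subset of layers, instead of the full network.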

Challenges in Training AI Foundation Models

The GPU computing power and human resources required to train a foundation model like GPT from scratch dwarf those available to individual developers and small teams. The models are simply too large, and the dataset is too unwieldy. Such models cannot (as of now) be cost-effectively trained end-to-end and iterated using commodity hardware.

Although the concepts may be well explained by published research and understood by many data scientists, the engineering skills and eye-watering costs required to wire up hundreds of GPU nodes for months at a time would stretch the budgets of most organizations. And that’s ignoring the costs of dataset access, storage, and data transfer associated with feeding the model massive quantities of training samples.

There are several reasons why foundation models like ChatGPT are currently out of reach for individuals to train:

- Data requirements: Training a large language model like ChatGPT requires a massive amount of text data. This data must be high-quality and diverse and is typically obtained from a variety of sources such as books, articles, and websites. This data is also preprocessed to get the best performance, which is an additional task that requires knowledge and expertise. Storage, data transfer, and data loading costs are substantially higher than what is used for more narrowly focused models.

- Computational resources: ChatGPT requires significant computational resources to train. This includes networked clusters of powerful GPUs and a large amount of memory, both volatile and non-volatile. The cost of running such a cluster can easily reach hundreds of thousands of dollars per experiment.

- Training time: Training a foundation model can take several weeks or even months, depending on the computational resources available. Wiring up and renting this many resources requires a lot of skill and a generous time commitment, not to mention associated cloud computing costs.

- Expertise: Getting a training run to complete successfully requires knowledge of machine learning, natural language processing, data engineering, cloud infrastructure, networking, and more. Such a large cross-disciplinary set of skills is not something that can be easily picked up by most individuals.

Accessing Pre-Trained AI Models

That said, there are pre-trained models available, and some can be fine-tuned with a smaller amount of data and resources for a more specific and narrower set of tasks, which is a more accessible option for individuals and smaller organizations.

Stable Diffusion reportedly took $600k to train, the equivalent of 150K GPU hours. That is a cluster of 256 GPUs running 24/7 for nearly a month. Even so, Stable Diffusion is considered inexpensive to train compared to GPT. So, while it is indeed possible to train your own foundation model using commercial cloud providers like AWS, GCP, or Azure, the time, effort, required expertise, and overall cost of each iteration limit how practical this is. There are many workarounds and techniques to re-purpose and partially re-train these models, but for now, if you want to train your own foundation model from scratch, your best bet is to apply to one of the few companies that have access to the resources necessary to support such an endeavor.
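The arithmetic behind those figures is easy to check. The short script below uses only the three numbers quoted above. The implied hourly rate is derived from them and is not a quoted cloud price.

```python
# Figures quoted in the text above.
total_cost_usd = 600_000
gpu_hours = 150_000
gpus_in_cluster = 256

cost_per_gpu_hour = total_cost_usd / gpu_hours   # implied hourly rate per GPU
wall_clock_hours = gpu_hours / gpus_in_cluster   # all GPUs running in parallel
wall_clock_days = wall_clock_hours / 24

print(f"${cost_per_gpu_hour:.2f} per GPU-hour")
print(f"{wall_clock_days:.1f} days of continuous cluster time")
```

This works out to $4.00 per GPU-hour and about 24.4 days of wall-clock time, which matches "nearly a month" on 256 GPUs.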

Contact Us to JumpStart Your AI Success

Kopius supports businesses seeking to govern and utilize AI and ML to build for the future. We’ve designed a program to JumpStart your customer, technology, and data success.

Tailored to your needs, our user-centric approach, tech smarts, and collaboration with your stakeholders equip teams with the skills and mindset needed to:

- Identify unmet customer, employee, or business needs

- Align on priorities

- Plan & define data strategy, quality, and governance for AI and ML

- Rapidly prototype data & AI solutions

- And, fast-forward success

Partner with Kopius and JumpStart your future success.

Related Services:

- Artificial Intelligence & Machine Learning Development

- Natural Language Processing (NLP)

- Data Strategy Consulting

Additional Resources:

- Free Download: Set Your Data Retention Policy Up for Success

- 3 Reasons Companies Advance Their Data Journey to Combat Economic Pressure

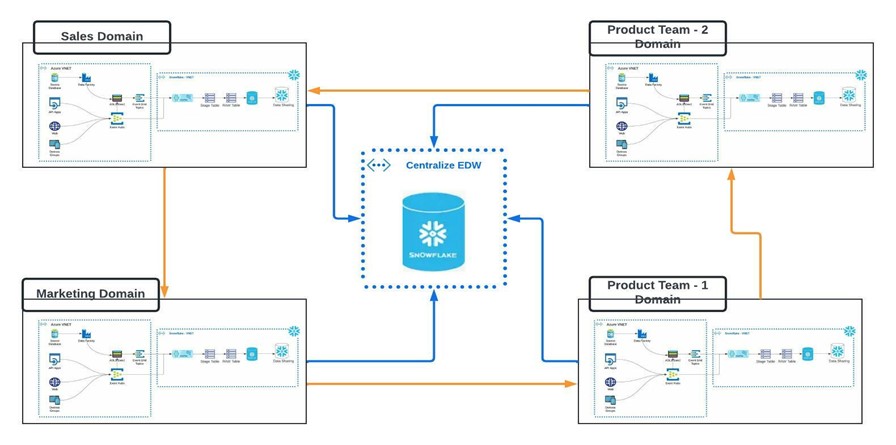

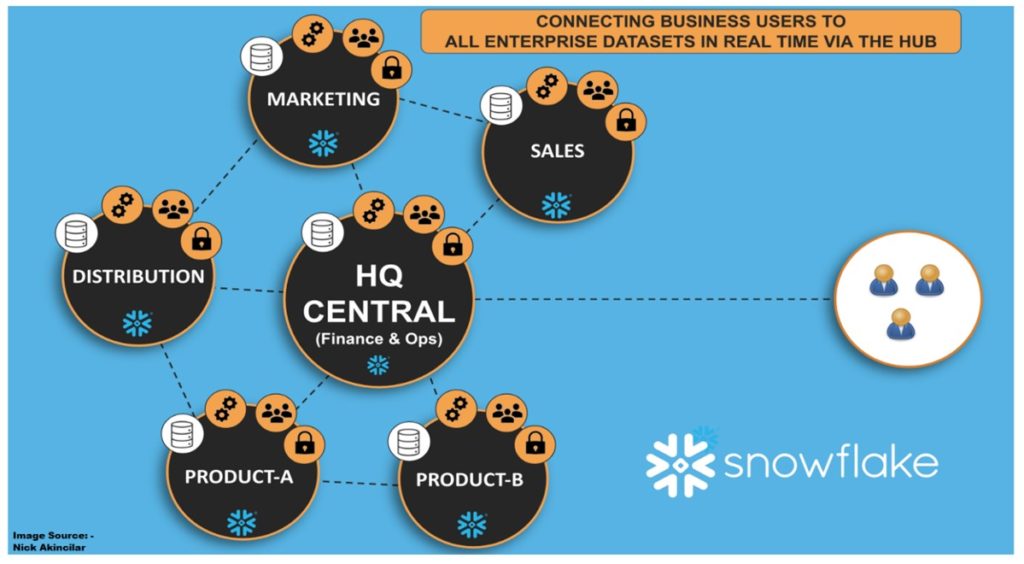

- Data Mesh Architecture in Cloud-based Data Warehouses